#Intro

Welcome to today’s webinar with Lukas Gentele, co-founder and CEO of Loft Labs, on how to regain your velocity and efficiency when working with Kubernetes.

Rahul: Good morning, good afternoon, or good evening, wherever in the world you are joining from. Just a few housekeeping items before we get started. You can put your questions in the chat window on LinkedIn. We will get to most of them at the end, but we will also pop some questions in along the way. I’m Rahul, your host. I head growth marketing here at Loft, and I’ll be moderating the questions in the background. Now over to you, Lukas.

#Presentation

Lukas: Thank you so much for the introduction. Let’s get this session started. I prepared a couple of slides. As Rahul mentioned, I’m Lukas, the CEO of Loft Labs. We’re working on a whole bunch of open-source Kubernetes tools, including vcluster, DevSpace, and a couple of other projects. Velocity and developer experience are really important to us, and they matter to pretty much anyone who has to deal with code day to day.

Everybody says that Kubernetes is complex and that it may actually slow you down. So how can we get back to the very efficient workflow we had before Kubernetes? That’s what today’s session is about. Let’s take a trip down memory lane to a few years ago, when the world was a lot simpler.

#Slide 1:

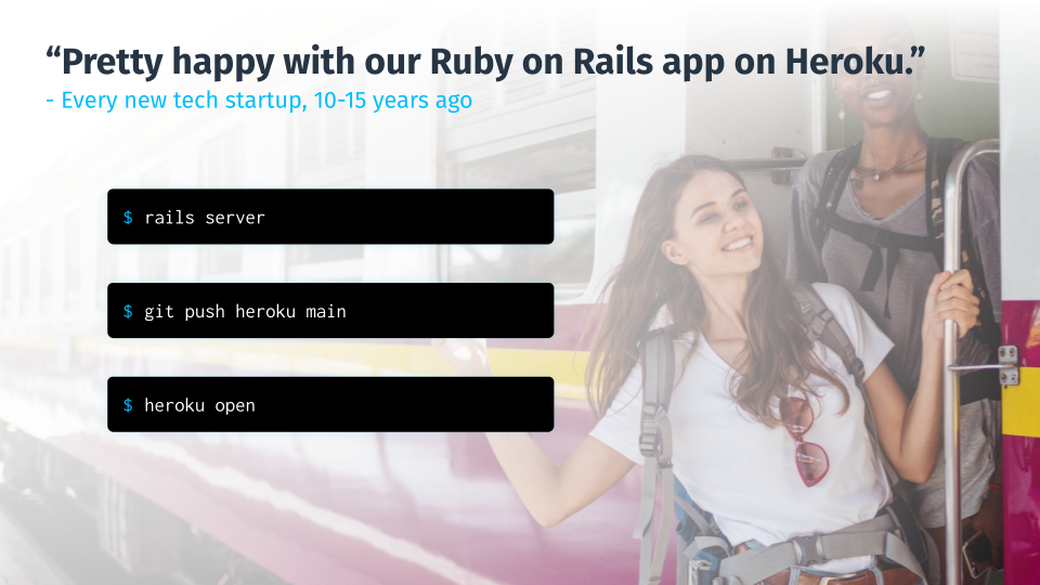

Many tech startups that were founded 10 to 15 years ago, GitLab and GitHub among them, bet on Ruby on Rails. They were super happy with that stack because it was very efficient. It was easy for engineers to get up and running, and velocity is key in business, and in tech, to create business value. Back then it was super straightforward.

We fired up a Rails server with two words, `rails server`, and that served the app on our local machine. If we wanted to deploy it, we just ran `git push heroku main` (`git push heroku master` back then) to deploy a branch. Then we ran `heroku open` to get a URL so we could access the app in the browser, test it, and write some local scripts to test against our REST API or whatever we were building.
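For contrast with what comes next, here is that three-command workflow written out as data. This is a minimal Python sketch that only lists the commands and does not execute them. It assumes a Rails app whose Git repository already has a Heroku remote named `heroku`, and the names `HEROKU_WORKFLOW` and `describe` are illustrative only.

```python
# The Heroku-era workflow described above, as argv lists.
# Nothing is executed here; any entry could be handed to subprocess.run().
HEROKU_WORKFLOW: list[list[str]] = [
    ["rails", "server"],                # serve the app on the local machine
    ["git", "push", "heroku", "main"],  # deploy the branch ("master" on older setups)
    ["heroku", "open"],                 # open the deployed app's URL in the browser
]


def describe(workflow: list[list[str]]) -> str:
    """Render a workflow as the shell commands an engineer would type."""
    return "\n".join(" ".join(cmd) for cmd in workflow)


if __name__ == "__main__":
    print(describe(HEROKU_WORKFLOW))
```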

Things were a lot simpler back then. A few years later, complexity increased as companies started serving global audiences and the cloud became a thing. Distributed computing became available to the masses.

#Slide 2:

The monolith was no longer a great option, right? Monoliths really sucked, and everybody said we need microservices, we need Kubernetes. Kubernetes started to emerge, and every tech startup founded in that cycle saw the problems with the monolith. That is why they bet on a different technology. Monolithic applications have a couple of issues. They’re very tightly coupled. If you have a Ruby application and you’re a small startup, that’s great. Velocity is amazing: we can ship quickly, build new features, and actually get the app out the door. Down the road, though, we have many more use cases and the platform keeps expanding. Eventually this tight coupling turns into dependency hell, because suddenly it takes 10 people to figure out whether we can ship a new feature or whether it will break something somewhere else in the monolith. That’s pretty problematic. We end up with a very centralized monolith, which raises stability concerns: if we upgrade or change something, does that affect something else?

Just think about those infamous database migrations that were underestimated, turned out to be very hard to roll back, and brought down entire internet services used by thousands or even millions of people. That’s really problematic. Upgrades also become very complicated, which slows down your release cycles, and that’s not what we want. So we need microservices on Kubernetes, right? Everybody jumped on the new hot train: split the monolith into microservices, ship them in containers with Docker, and use Kubernetes to orchestrate them in the cloud. That solves a lot of these concerns, but it introduces new ones, because microservices and Kubernetes may really slow you down.

#Slide 3:

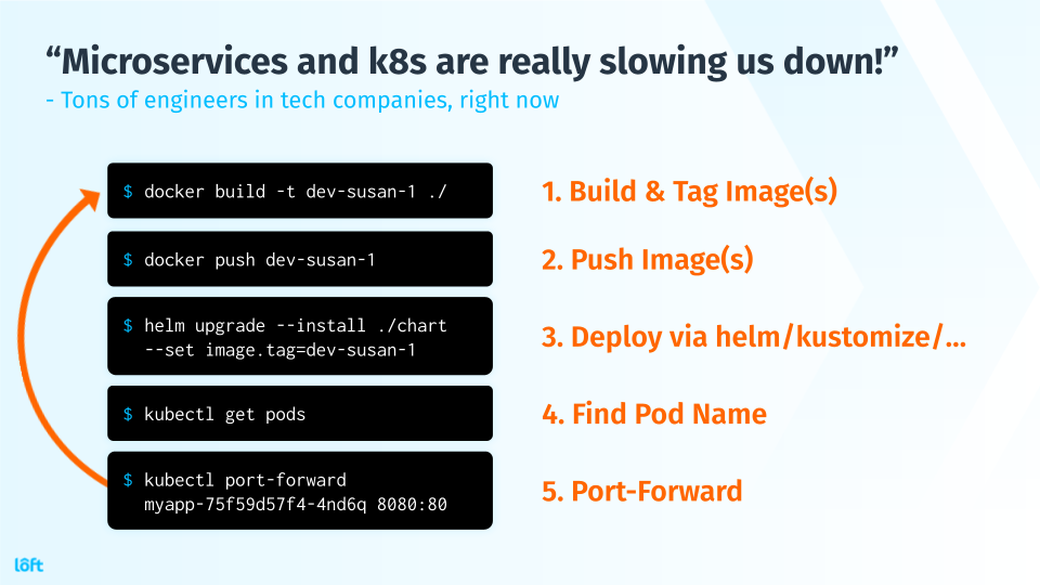

A lot of engineers are facing this right now, which is why we’re talking about it in this session. Think back to our Rails application: `rails server` to start it up, a push to Heroku to deploy it, and `heroku open` to get a URL. Now think about the same workflow with containers.

Today, step by step, we first have to build and tag an image. That command alone is a lot longer than `rails server`, and all it does is build and tag an image. Then we have to push the image to a registry. What I didn’t include here: How do I even get the credentials for that registry? Where does the registry live? What is our tagging strategy? How do images get cleaned up again?
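The slide only raises the tagging question. One possible answer, offered here purely as an assumption and not as anything shown in the talk, is to derive the tag from a hash of the build context. An unchanged context then always maps to the same tag, and the image can be reused instead of being rebuilt and pushed again. This minimal sketch uses only Python’s standard library. The function names and the `registry.example.com` registry are hypothetical, and it ignores `.dockerignore`, which a real build would respect.

```python
import hashlib
from pathlib import Path


def content_tag(build_context: str, length: int = 12) -> str:
    """Derive a deterministic image tag from every file in the build context."""
    digest = hashlib.sha256()
    root = Path(build_context)
    # Sort so the tag does not depend on filesystem iteration order.
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        data = path.read_bytes()
        # File names cannot contain NUL, so it cleanly terminates the name;
        # the length prefix keeps one file's bytes from running into the next.
        digest.update(path.relative_to(root).as_posix().encode() + b"\0")
        digest.update(len(data).to_bytes(8, "big"))
        digest.update(data)
    return digest.hexdigest()[:length]


def image_ref(registry: str, repository: str, tag: str) -> str:
    """Join registry, repository and tag into a reference for `docker build -t` / `docker push`."""
    return f"{registry}/{repository}:{tag}"
```

A common alternative is to tag with the current Git commit SHA. That approach is simpler, but it produces a new tag for every commit, even when the build context did not change.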

There’s a whole bunch of complexity on that end. Then we have to deploy. Look at this bulky command: it takes two lines on the slide, because we have to use Helm, or Kustomize and manifests. I’m sparing you the whole wall of YAML, but that also lives under the hood here, and it’s not simple at all. Then, if we want to access the application, the first step is always the same, whether we want to view the logs, open it in a browser, or port forward.

It’s finding the pod name. Kubernetes is distributed, so there’s no fixed name. Our pod isn’t called `api-server` or something like that. It has a generated suffix, so we have to look up that name, copy it, and then run a command like `kubectl port-forward`. And guess what: if you want to make a change, you have to start at the top again, and you can’t skip any of these steps. We need a new image, we need to push that new image, and we need another `helm upgrade`. Then we need to find the pod name again, because Kubernetes doesn’t reuse the old pod’s name, and restart port forwarding to the new pod that Kubernetes just created. That’s not fast or efficient. It’s not our Heroku push, and it’s not accessible. That’s why a trend has emerged over the past couple of years that is actually an old one: people respond to complexity by saying “we need a PaaS.” And a PaaS is exactly what Heroku was, my first example.
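The inner loop described above can be sketched as data plus one small parser. This is a minimal Python sketch built on several assumptions: the image name, release name, chart path, the `app=api` label, and the `image.tag` values key are all hypothetical, and the commands are only built, not run. The parser reads the JSON printed by `kubectl get pods -l app=api -o json` and picks the newest running pod. It skips pods from the previous rollout that are still shutting down.

```python
import json
import shlex


def deploy_commands(image: str, tag: str, release: str, chart: str) -> list[list[str]]:
    """Build, push and deploy steps for one inner-loop iteration, as argv lists."""
    ref = f"{image}:{tag}"
    return [
        ["docker", "build", "-t", ref, "."],  # build and tag from the current directory
        ["docker", "push", ref],              # assumes `docker login` has already been done
        # "image.tag" is a hypothetical values key; the real key depends on the chart.
        ["helm", "upgrade", "--install", release, chart, "--set", f"image.tag={tag}"],
    ]


def find_running_pod(pods_json: str) -> str:
    """Pick the newest Running, non-terminating pod from `kubectl get pods ... -o json`."""
    items = json.loads(pods_json)["items"]
    candidates = [
        p for p in items
        if p["status"].get("phase") == "Running"
        and "deletionTimestamp" not in p["metadata"]  # skip pods from the old rollout
    ]
    if not candidates:
        raise LookupError("no running pod matches the selector yet")
    # creationTimestamp is a fixed-width UTC RFC 3339 string, so string order is time order.
    newest = max(candidates, key=lambda p: p["metadata"]["creationTimestamp"])
    return newest["metadata"]["name"]


def port_forward_command(pod: str, local_port: int, remote_port: int) -> list[str]:
    """The port-forward step, which has to be rerun for every new pod name."""
    return ["kubectl", "port-forward", f"pod/{pod}", f"{local_port}:{remote_port}"]


if __name__ == "__main__":
    for cmd in deploy_commands("registry.example.com/api", "dev-1", "api", "./chart"):
        print(shlex.join(cmd))
    print(shlex.join(["kubectl", "get", "pods", "-l", "app=api", "-o", "json"]))
```

kubectl also accepts a workload reference, such as `kubectl port-forward deployment/api 8080:3000` or `kubectl logs deployment/api`, and picks a pod for you. That removes the manual lookup, but the forward still breaks and has to be restarted after every rollout.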

#Slide 4:

It’s a repeating pattern. We’ll get into why this pattern won’t be the ultimate solution in a second, but first let’s look at the history of the PaaS, because it turned out to be very interesting while I was creating this presentation. I was actually surprised by how predictable the cycle is: new complexity gets introduced, a new PaaS gets created, and each time the PaaS seems like the solution.

In 2007 we had Heroku, and Google App Engine emerged shortly after. Those were the OG PaaS offerings, and Heroku is still the gold standard for a successful PaaS today. A couple of years later there was another wave of PaaS: AWS jumped on the train, and Cloud Foundry was created for the new cloud world so that large enterprises could easily provision clouds. Then the whole Docker container ecosystem took off in the cloud (Docker itself grew out of dotCloud, a PaaS company), and container-based PaaS offerings followed a couple of years later. Today we are seeing PaaS offerings emerge on top of Kubernetes to solve the complexity not of containers, and not of the cloud, but of Kubernetes.

Now we have things like Google Cloud Run, which tries to be that PaaS layer on top, and a whole bunch of others. The Rancher founders just started a company to build a PaaS on Kubernetes. A whole stream of companies and open-source developers is focused on building this new PaaS. So why does this pattern keep repeating? Why, every four years or so, does everybody think we need a PaaS for this, another layer on top? It’s very interesting.

What we always see a few years into the PaaS journey is that people start hating that PaaS, and it’s interesting that this keeps repeating. A PaaS has a couple of benefits, and being opinionated is actually a huge one. But by being opinionated, a PaaS also really limits a user’s choices, and that can be a problem. Say Heroku offers MySQL but you need something else, like a time-series database. Then you quickly hit the limits of what your PaaS can do for you. A PaaS is also a lot of magic. That solves a lot of problems at first, because the magic does things under the hood so you don’t have to invent, think about, or configure them. That’s great, but magic also really gets in the way of debugging.

It really stops users from diving deeper, because all we see is the PaaS layer. With Heroku we can’t dig much deeper, since we don’t know what’s happening under the hood. That really limits an engineer’s ability to debug things, to find out what may actually be going wrong, and to optimize certain things. Ultimately, a PaaS means adding layers of abstraction. Each PaaS adds its own layer, and that creates a kind of lock-in. In the case of Cloud Foundry, it may be an open-source lock-in.

In the case of Heroku, it may be a lock-in to a SaaS platform. Either way, it’s a lock-in to a way of doing things. Once the magic and the opinionated design of the PaaS start to limit you, that lock-in becomes a problem. Even an open-source lock-in is often a problem, because you’re doing things a certain way and you’re really bound by the ways of your PaaS. So how can we solve this nevertheless?

#Slide 5:

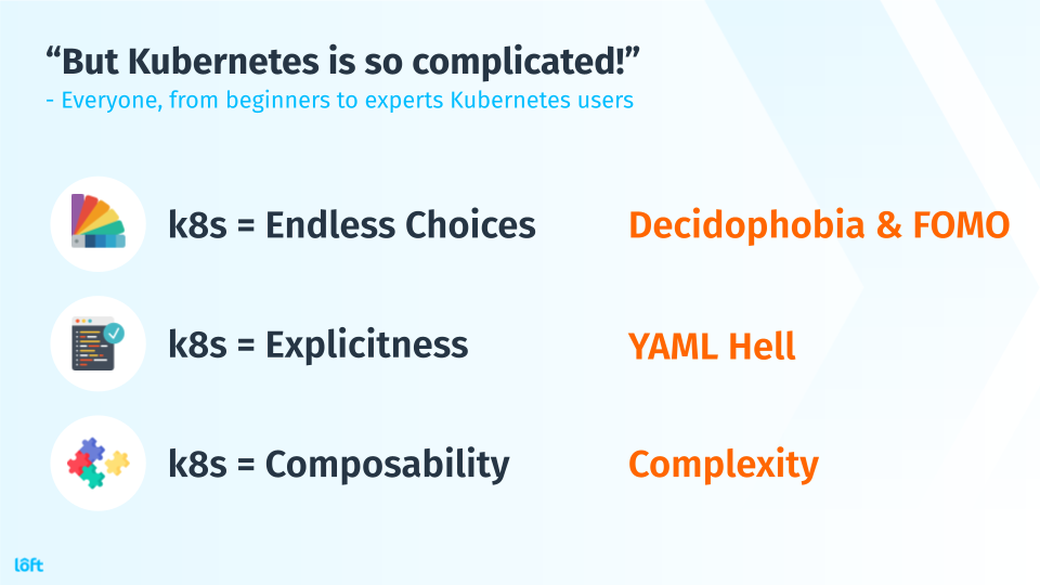

Kubernetes is complicated. Everyone from beginners to experts sees that, and how to get around that complexity is still an open question. Kubernetes has endless choices in its ecosystem, and that’s really great. Just look at the CNCF landscape. I’m sparing you that image you see in every single talk, but thousands of tools are being created in the open-source space. That’s very exciting, because you want to try all of these tools and you have a lot of possibilities, but it also leads to two things.

The first is decidophobia, a term that was new to me: the fear of making a decision. Should I use Solo, Istio, or Linkerd? What am I using for my service mesh? What am I using for my API gateway? You’re looking at all of these tools and asking which one to pick. You want to bet on the winning horse, but which one is it? So you hold off and don’t go all in on any of them, because you’re not sure yet which one will get broad community adoption and 20,000 stars on GitHub. Holding off on that decision is actually really problematic for the business. We can’t just stand still. We have to move forward, ship new features, and satisfy customer requests. So when there are so many choices and, in a lot of cases, nobody makes a decision, decidophobia becomes a real blocker in tech.

The irony is that the decision itself matters less than you’d think, because later on you can always refactor things and switch to a different tech stack. I know so many companies that were on Docker Swarm or Mesosphere in the early days. Then Kubernetes won, and they simply had to make the switch to Kubernetes.

Spotify gave an interesting talk about this at KubeCon, I think two or three years ago. They had built their own orchestrator internally and released it the same day Kubernetes was publicly announced, which was super unfortunate. They still stuck with their technology for a few years because it just worked for them. At some point they realized that Kubernetes, being open source and having so much community backing, was outpacing what they could deliver internally, so they made the switch. That was probably painful, and a lot of work probably went into moving from Helios, their internal tool, to Kubernetes.

But it was possible, and that’s a very fundamental change: moving from one container orchestration platform to another. Those things are possible, so making a call is better than not making a call. You’ve got to decide on something. Having so many choices in Kubernetes also has another effect, though, and that’s FOMO.

You’re like, oh, there’s this new tool, I’ve got to check it out, or oh, there’s this new option, we’ve got to jump on this hype train. Whenever a new hype emerges, you have this fear of missing out, and that’s very common in the Kubernetes space.

Kubernetes is also perceived as very complicated because it is very explicit. I see that as a benefit. There isn’t a lot of magic in Kubernetes, so it’s always clear what’s happening, and there’s always some YAML option to tweak whatever you need for your specific scenario or use case. But it leads to YAML hell.

Everybody knows this explicitness: we have to state everything. That leads to complicated YAML manifests and really overloads people. Kubernetes is also very composable. It’s not like a Rails monolith that says we’ll deal with all of this for you. It lets you pick and choose everything: your storage, your networking, your service mesh, your API gateway. You’re not really bound by a framework or by specific tasks.
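To make that explicitness concrete, here is a minimal sketch of close to the smallest useful Deployment, built as a Python dict and emitted as JSON, which kubectl accepts just like YAML. The app name, image, and port are placeholders. Real manifests usually add resource requests, probes, and a Service on top of this.

```python
import json


def minimal_deployment(name: str, image: str, port: int) -> dict:
    """Build a minimal apps/v1 Deployment; every field here has to be stated explicitly."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            # The selector must match the pod template's labels, or the API server rejects it.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_deployment("api", "registry.example.com/api:dev", 3000), indent=2))
```

Piping this output into `kubectl apply -f -` would create the Deployment in the current namespace.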

You have a lot of flexibility, but that also leads to complexity, because you have to decide how these things work together. When you make these choices and explicitly define what should be running, you need to wire things up the right way so they work together seamlessly. Very few tools help you integrate things smoothly in the Kubernetes world, so there’s definitely work to do on that end.

So how can we alleviate some of these pains and make Kubernetes a lot more approachable for engineers, so that they don’t have to face all of this? A lot of companies have started doing platform engineering. Many teams within companies now focus on developer velocity and developer experience. They build tooling for their internal teams, essentially a “golden path” that teams can follow to get a great experience and build features very quickly.

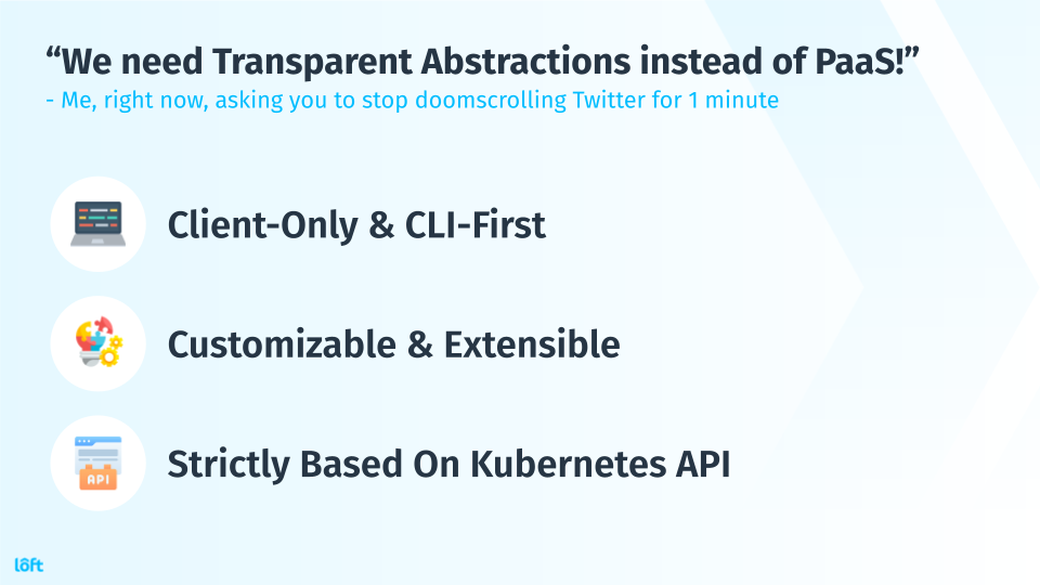

#Slide 6:

If you ask me, the most important thing for these teams is to build transparent abstractions rather than a PaaS. You can build some kind of internal PaaS, or build on top of something like Cloud Run, but either way you’re locking your users in again. I would argue that transparent abstractions are what a lot of companies are looking for. In my opinion, that means building something client-only. It isn’t a bulky PaaS that we send requests to and that somehow handles things for us magically under the hood. It’s a client-only tool based on kubectl.

We’re building something CLI-first, so engineers can use it in their terminal, from VS Code, or from whatever IDE they prefer. Whatever we build has to be customizable and extensible, because every company, every team, and every project within a company is different, and folks need the flexibility to build out their specific use case. One big benefit we have in the Kubernetes world is the Kubernetes API. That’s actually the huge benefit of Kubernetes: it standardizes how things are defined, with this explicit YAML, or rather the JSON that travels under the hood between kubectl and the Kubernetes API server. As long as we stick to that, whatever we build for our engineers streamlines the workflow but still ties back to kubectl commands. If I’m able to run the same steps via kubectl instead of the tool’s CLI, that’s a transparent abstraction. I can use the tool to streamline certain things, because it combines certain commands, executes them in a certain order, and finds the right way to execute them. But I can always see what it’s doing, and it’s even better if the tool can explain it to me.

It can tell me the equivalent in kubectl, Helm, or any other higher-level tool based on Kubernetes: if you run this command, it runs these five commands for you under the hood. That’s really smart, because it makes things understandable for engineers, especially when they want to debug things and dive deeper. We don’t hit the PaaS limitation of being stuck with our PaaS with no way past it. That’s what we prevent by betting on the Kubernetes API. And that brings us to the end of the slides and toward the practical part of this webinar.
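A transparent abstraction in this sense can be very small. Below is a minimal sketch of a hypothetical `dev` wrapper in Python. It is not DevSpace or any other real tool. It expands one command into five plain docker, helm, and kubectl calls, prints them by default, and only executes them when asked to. The registry, chart path, ports, and `image.tag` value are assumptions.

```python
import shlex
import subprocess
import sys


def dev_steps(app: str, tag: str) -> list[list[str]]:
    """Plain docker/helm/kubectl calls behind a hypothetical `dev` command."""
    image = f"registry.example.com/{app}:{tag}"  # hypothetical registry
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],
        # Assumes a chart at ./chart that names its Deployment after the release
        # and reads the image tag from a hypothetical "image.tag" value.
        ["helm", "upgrade", "--install", app, "./chart", "--set", f"image.tag={tag}"],
        ["kubectl", "rollout", "status", f"deployment/{app}"],  # wait for the new pods
        ["kubectl", "port-forward", f"deployment/{app}", "8080:80"],  # no manual pod lookup
    ]


def explain(steps: list[list[str]]) -> list[str]:
    """The transparent part: the exact commands the wrapper would run."""
    return [shlex.join(step) for step in steps]


def run(steps: list[list[str]]) -> None:
    """Echo each command before running it; stop at the first failure."""
    for step in steps:
        print("+", shlex.join(step), file=sys.stderr)
        subprocess.run(step, check=True)


if __name__ == "__main__":
    steps = dev_steps("api", "dev")
    # Only explain by default; pass --run to actually execute the steps.
    if "--run" in sys.argv[1:]:
        run(steps)
    else:
        print("\n".join(explain(steps)))
```

Every step is an ordinary docker, helm, or kubectl invocation. An engineer who hits a problem can copy any printed line and run or modify it by hand, which is the escape hatch the talk argues a PaaS lacks.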

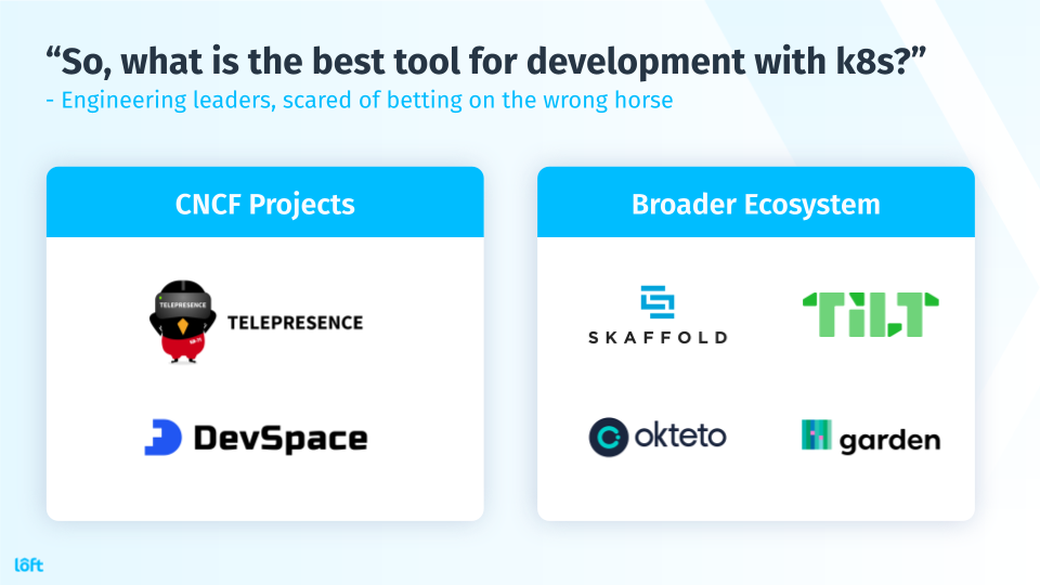

#Slide 7:

Luckily, there are tools out there. If you ask me which tool is the best, I’d say that’s probably the wrong question. It really depends on what you’re looking for and on the requirements of your specific project, team, and engineering culture. There are so many factors to consider that I can’t give you a single answer, but I can suggest a couple of tools to look into.

The CNCF is always a great starting point. There are two projects in the CNCF sandbox right now that you may want to consider. The first is Telepresence, which has been in the sandbox for years. Their approach is: you deploy your services, and how you deploy them isn’t something they cover. They don’t do image building or `helm install`. Once things are deployed, Telepresence helps you connect your local dev environment to the remote services. You can run one service locally while the other microservices run inside the Kubernetes cluster, and Telepresence does some network magic so your local service can talk to those remote services.

It’s a very interesting tool and one of the earliest in the Kubernetes development world. The second is DevSpace, one of the tools we’ve been building for a couple of years now. It is now in the CNCF sandbox, and I’m super excited to list it here next to an amazing tool like Telepresence. DevSpace takes a slightly different stance than Telepresence. It has a broader scope, so it also covers how you deploy the application. You can tell it not to handle that, but if you want, it covers efficient image building, tagging, optional pushing, and deployment with `helm install` and Kustomize.

However we stand up our 20 microservices, DevSpace can cover that, even with dependencies across repositories. Unlike Telepresence, it doesn’t have you run a service locally on your laptop. Instead, it runs everything inside the Kubernetes cluster, and the services you have an IDE window open for on your local machine get hot reloaded. That means you can update the container in real time without rebuilding the image, which adds a whole lot of velocity. The commands I showed you earlier for building, tagging, and pushing images take a lot of time, and with DevSpace we skip them entirely. We deploy an initial version of everything and then hot-swap things inside the containers: binaries, source code, and static assets, through a sync mechanism and hot reloading.
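The sync-based hot reload idea can be illustrated with a deliberately naive sketch. This is not how DevSpace implements its sync. It is a Python example, assuming a pod name and container path that you supply, that snapshots file hashes, compares two snapshots, and turns the result into `kubectl cp` and `kubectl exec ... rm` commands. `kubectl cp` requires `tar` inside the container, and the sketch assumes the target directories already exist. A real sync watches the file system continuously and transfers changes far more efficiently.

```python
import hashlib
from pathlib import Path


def snapshot(root: str) -> dict[str, str]:
    """Map each file's relative path to a SHA-256 of its contents."""
    base = Path(root)
    return {
        p.relative_to(base).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in base.rglob("*")
        if p.is_file()
    }


def diff(old: dict[str, str], new: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (changed_or_added, deleted) relative paths between two snapshots."""
    changed = sorted(path for path, digest in new.items() if old.get(path) != digest)
    deleted = sorted(path for path in old if path not in new)
    return changed, deleted


def sync_commands(pod: str, container_dir: str, root: str,
                  changed: list[str], deleted: list[str]) -> list[list[str]]:
    """Turn a diff into kubectl commands that update the running container in place."""
    uploads = [
        ["kubectl", "cp", str(Path(root, rel)), f"{pod}:{container_dir}/{rel}"]
        for rel in changed
    ]
    removals = [
        ["kubectl", "exec", pod, "--", "rm", "-f", f"{container_dir}/{rel}"]
        for rel in deleted
    ]
    return uploads + removals
```

Calling `snapshot` again after each save and feeding the `diff` into `sync_commands` gives a crude polling loop. Everything in it happens on the client, which matches the client-only approach described earlier.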

These containers pick up every change you make on your local file system immediately. We also run port forwarding in the background and let you hook up your IDE through a remote extension. VS Code’s remote extension, for example, is pretty awesome: you can connect to these containers and, if you want to, use remote debuggers with them. DevSpace supports all of these things so you can build the perfect developer experience for your team.

There’s also a broader ecosystem of tools I’d encourage you to look into, such as Skaffold, Tilt, Okteto, or Garden. Those are just a few tools outside of the CNCF projects that I’d strongly urge you to have a look at. There are around 50 other tools as well. Unfortunately, I don’t have room on the slides to list them all, but definitely check the CNCF landscape section, I think it’s called Application Definition & Image Build, where you can see other tools in this space. And that’s the end of my slides.

#DevSpace Demo and Questions

Check out the video for the demo and the Q&A.