Virtual Clusters

In Loft, users can create virtual Kubernetes clusters (vclusters). Each vcluster is a fully functional Kubernetes cluster that runs inside a namespace of the underlying Kubernetes cluster. This is cheaper than creating separate full-blown clusters and offers better multi-tenancy and isolation than regular namespaces.

Virtual Clusters in Loft are based on the open source project vcluster. For more in-depth information, please take a look at the official vcluster docs.

Why Virtual Clusters?

Virtual clusters:

- are much cheaper than "real" clusters (shared resources and sleep mode just like for namespaces)

- can be created and cleaned up again in seconds (great for CI/CD or testing)

- allow you to test different Kubernetes versions inside a single host cluster, which may run a different version than the virtual clusters

- are more powerful than simple namespaces (virtual clusters allow users to use CRDs etc.)

- allow users to install apps which require cluster-wide permissions while actually being limited to just one namespace within the host cluster

- provide strict isolation while still allowing you to share certain services of the underlying host cluster (e.g. using the host's ingress-controller and cert-manager)

Workflows

Create Virtual Cluster

You can create a virtual cluster in the Loft UI, with the Loft CLI, or with kubectl.

To create a virtual cluster with the Loft CLI, run this command:

loft create vcluster [VCLUSTER_NAME] # optional flag: --cluster=[CLUSTER_NAME]

Creating a virtual cluster using the CLI will automatically configure a kube-context on your local machine for this vcluster.

With the kube-context of the vcluster, you can run any kubectl command within the virtual cluster:

kubectl get namespaces

To create a virtual cluster with kubectl instead, first create the file vcluster.yaml:

apiVersion: storage.loft.sh/v1
kind: VirtualCluster
metadata:
  name: [VCLUSTER_NAME] # REPLACE THIS
  namespace: [VCLUSTER_NAMESPACE] # REPLACE THIS
spec:
  helmRelease:
    values: >-
      virtualCluster:
        image: rancher/k3s:v1.21.2-k3s1
        extraArgs:
          - --service-cidr=[VCLUSTER_SERVICE_CIDR] # REPLACE THIS

Create the virtual cluster using kubectl:

kubectl apply -f vcluster.yaml

Use Virtual Cluster

You can either use the Loft CLI to create a kube config automatically or you can create your own kube config with an Access Key.

Run this command to add a kube-context for the virtual cluster to your local kube-config file or to switch to an existing kube-context of a virtual cluster:

loft use vcluster # shows a list of all available vclusters

loft use vcluster [VCLUSTER_NAME] # optional flags: --cluster=[CLUSTER_NAME] --space [VCLUSTER_NAMESPACE]

Then, run any kubectl command within the virtual cluster:

kubectl get namespaces

If you need to construct a kube config directly, you'll need to create an Access Key first. Then create a kubeconfig.yaml in the following format:

apiVersion: v1
kind: Config
clusters:
- cluster:
    # Optional if untrusted certificate
    # insecure-skip-tls-verify: true
    server: https://my-loft-domain.com/kubernetes/virtualcluster/$CLUSTER/$NAMESPACE/$VCLUSTER
  name: loft
contexts:
- context:
    cluster: loft
    user: loft
  name: loft
current-context: loft
users:
- name: loft
  user:
    token: $ACCESS_KEY

Replace $ACCESS_KEY with your generated access key, $CLUSTER with the name of the connected Kubernetes cluster the vcluster is running in, $NAMESPACE with the namespace the vcluster is running in, and $VCLUSTER with the name of the vcluster.

Then run any command in the vcluster with:

kubectl --kubeconfig kubeconfig.yaml get ns
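
If you create kube configs for several virtual clusters, it may be easier to render the file from shell variables than to edit it by hand. The following is a minimal sketch of that idea. The variable names and all values assigned to them are placeholders chosen for this example, not names defined by Loft.

```shell
#!/bin/sh
# Sketch: render kubeconfig.yaml for one vcluster from shell variables.
# Every value below is a placeholder (assumption); replace it with your own.
LOFT_HOST="my-loft-domain.com"   # domain of your Loft instance
CLUSTER="my-cluster"             # connected cluster the vcluster runs in
NAMESPACE="my-namespace"         # namespace the vcluster runs in
VCLUSTER="my-vcluster"           # name of the vcluster
ACCESS_KEY="REPLACE_ME"          # generated access key

# The heredoc delimiter is unquoted so that the variables are expanded.
cat > kubeconfig.yaml <<EOF
apiVersion: v1
kind: Config
clusters:
- cluster:
    server: https://${LOFT_HOST}/kubernetes/virtualcluster/${CLUSTER}/${NAMESPACE}/${VCLUSTER}
  name: loft
contexts:
- context:
    cluster: loft
    user: loft
  name: loft
current-context: loft
users:
- name: loft
  user:
    token: ${ACCESS_KEY}
EOF

# The file contains a credential, so restrict it to the current user.
chmod 600 kubeconfig.yaml
```

The generated file has the same layout as the kubeconfig.yaml shown above, without the optional insecure-skip-tls-verify comment.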

In order for a user to access a virtual cluster, the user needs the RBAC permission get on the resource virtualclusters in the API group storage.loft.sh with API version v1, either in the namespace where the virtual cluster was created or cluster-wide.
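
As an illustration of the namespace-scoped variant, the sketch below writes a Role and RoleBinding that grant get on virtualclusters. The object name vcluster-access, the namespace, and the subject name are hypothetical. In particular, how Loft maps its users and teams to Kubernetes subjects is not covered in this section, so treat the subjects entry as an assumption to adapt. Kubernetes RBAC rules match on API group and resource only, so the API version does not appear in the rule.

```shell
#!/bin/sh
# Sketch: write a Role and RoleBinding granting "get" on virtualclusters.
# NAMESPACE and USER_NAME are hypothetical placeholders.
NAMESPACE="my-namespace"   # namespace the virtual cluster was created in
USER_NAME="jane"           # assumed Kubernetes subject name of the user

cat > vcluster-access.yaml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: vcluster-access
  namespace: ${NAMESPACE}
rules:
- apiGroups: ["storage.loft.sh"]
  resources: ["virtualclusters"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: vcluster-access
  namespace: ${NAMESPACE}
subjects:
- kind: User
  name: ${USER_NAME}
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: vcluster-access
  apiGroup: rbac.authorization.k8s.io
EOF

# Apply against the host cluster once the values are correct:
# kubectl apply -f vcluster-access.yaml
```

For cluster-wide access, the same rule would go into a ClusterRole bound with a ClusterRoleBinding.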

Delete Virtual Cluster

To delete a virtual cluster with the Loft CLI, run this command:

loft delete vcluster [VCLUSTER_NAME] # optional flags: --cluster=[CLUSTER_NAME] --space [VCLUSTER_NAMESPACE]

Run this command to delete a virtual cluster using kubectl:

kubectl delete virtualcluster [VCLUSTER_NAME] --namespace [VCLUSTER_NAMESPACE]
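
Because virtual clusters can be created and removed quickly, a CI job might wrap its test command in a create/delete pair. The following is a rough sketch of that pattern, using only the CLI commands shown on this page. The helper names run and with_vcluster, the DRY_RUN switch, and the vcluster name ci-test are made up for this example. By default the script only prints the commands it would run; set DRY_RUN=0 to execute them.

```shell
#!/bin/sh
# Sketch: run one command inside a throwaway vcluster, then delete it.
# DRY_RUN=1 (the default here) prints commands instead of executing them.
DRY_RUN="${DRY_RUN:-1}"

# Execute a command, or just print it when DRY_RUN is 1.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: with_vcluster NAME COMMAND [ARGS...]
with_vcluster() {
  name="$1"
  shift
  # Creating the vcluster with the CLI also configures the kube-context.
  run loft create vcluster "$name" || return 1
  # Run the given command and remember its exit status.
  status=0
  run "$@" || status=$?
  # Clean up even if the command failed.
  run loft delete vcluster "$name"
  return "$status"
}

with_vcluster ci-test kubectl get namespaces
```

The function returns the exit status of the wrapped command, so a failing test still fails the job after the virtual cluster has been deleted.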

Advanced Configuration

You can configure virtual clusters using the VirtualCluster CRD or the Loft UI. Under the hood, virtual clusters are deployed using a Helm chart (see the vcluster project on GitHub). You can change how the virtual cluster is deployed via the Advanced Options section in the Loft UI.

For more specific use cases and greater in-depth documentation, please take a look at the official vcluster docs.

Networking

By default, Services and Ingresses are synced from the virtual cluster to the host cluster in order to enable correct network functionality for the virtual cluster.

That means that you can create ingresses in your virtual cluster to make services available via domains using the ingress-controller that is running in the host cluster. This helps to share resources across different virtual clusters and is easier for users of virtual clusters because otherwise, they would need to install an ingress-controller and configure DNS for each virtual cluster.

Because the syncer keeps annotations for ingresses, you may also set the cert-manager ingress annotations to use the cert-manager in your host cluster to automatically provision SSL certificates from Let's Encrypt.
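
As a sketch of what such an ingress could look like, the script below writes a manifest that carries the cert-manager.io/cluster-issuer annotation. The hostname, the issuer name letsencrypt-prod, and the service and secret names are assumptions for illustration. The example also assumes that the host cluster has a ClusterIssuer with that name and a default ingress class.

```shell
#!/bin/sh
# Sketch: write an Ingress that asks cert-manager for a certificate.
# APP_HOST and ISSUER are hypothetical; use values from your host cluster.
APP_HOST="app.example.com"   # domain that points to the host ingress-controller
ISSUER="letsencrypt-prod"    # assumed name of a ClusterIssuer in the host cluster

cat > ingress.yaml <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app
  annotations:
    cert-manager.io/cluster-issuer: ${ISSUER}
spec:
  tls:
  - hosts:
    - ${APP_HOST}
    secretName: my-app-tls
  rules:
  - host: ${APP_HOST}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: my-app
            port:
              number: 80
EOF

# Apply inside the virtual cluster (using the vcluster's kube-context):
# kubectl apply -f ingress.yaml
```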

If you do not want ingresses to be synced and instead use a separate ingress controller within a virtual cluster, add the following syncer configuration in the virtual cluster advanced options:

syncer:
  extraArgs: ["--disable-sync-resources=ingresses"]

Overriding virtual cluster defaults

You can define default values for virtual cluster creation by creating virtual cluster templates and assigning a template to a connected cluster as its default template.

How does it work?

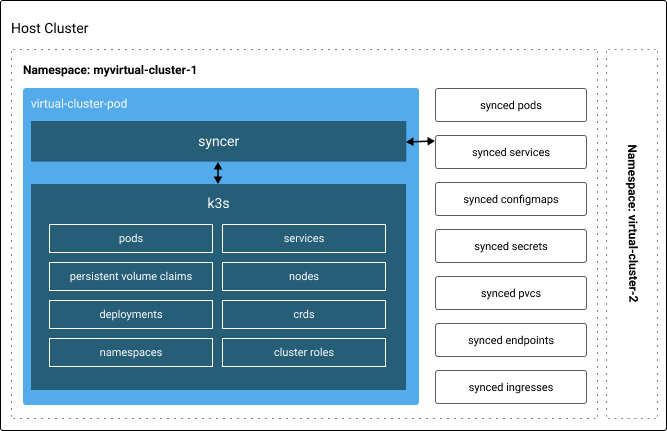

The basic idea of a virtual cluster is to spin up a functional but incomplete new Kubernetes cluster within an existing cluster and sync certain core resources between the two clusters. The virtual cluster itself consists only of the core Kubernetes components: API server, controller manager and etcd. Some core Kubernetes resources that are needed for actual execution, such as pods, services and persistentvolumeclaims, are synced to the host cluster. All other Kubernetes resources (such as unbound configmaps, unbound secrets, deployments, replicasets, resourcequotas, clusterroles, crds and apiservices) are handled purely within the virtual cluster and are NOT synced to the host cluster. This makes it possible to give each user access to their own complete Kubernetes cluster with full functionality, while still separating users into namespaces in the actual host cluster.

A virtual Kubernetes cluster in Loft is tied to a single namespace. The virtual cluster and hypervisor run within a single pod that consists of two parts:

- a k3s instance which contains the Kubernetes control plane (API server, controller manager and etcd) for the virtual cluster

- an instance of a virtual cluster hypervisor which is mainly responsible for syncing cluster resources between the k3s powered virtual cluster and the underlying host cluster

Host Namespace

All Kubernetes objects of a virtual cluster are encapsulated in a single namespace within the underlying host cluster, which allows system admins to restrict the resources of a virtual cluster via account/resource quotas or limit ranges. Because the virtual cluster uses a cluster ID suffix for each synced resource, it is also possible to run several virtual clusters within a single namespace without them interfering with each other. Furthermore, it is also possible to run virtual clusters within virtual clusters.
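
For example, a plain Kubernetes ResourceQuota on the host namespace caps everything the virtual cluster syncs into it. The sketch below writes such a manifest. The quota name, the namespace, and all limits are arbitrary example values.

```shell
#!/bin/sh
# Sketch: write a ResourceQuota for the host namespace of a vcluster.
# NAMESPACE and all limits below are arbitrary example values.
NAMESPACE="my-namespace"   # host namespace the virtual cluster runs in

cat > vcluster-quota.yaml <<EOF
apiVersion: v1
kind: ResourceQuota
metadata:
  name: vcluster-quota
  namespace: ${NAMESPACE}
spec:
  hard:
    pods: "20"
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
EOF

# Apply against the host cluster, not the virtual cluster:
# kubectl apply -f vcluster-quota.yaml
```

Once a quota covers CPU or memory requests and limits, Kubernetes rejects pods in that namespace that do not declare them, so such a quota is usually paired with a LimitRange that supplies defaults.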

Control Plane

The virtual cluster technology of Loft is based on k3s to reduce virtual cluster overhead. k3s is a certified Kubernetes distribution that allows Loft to spin up a fully functional Kubernetes cluster from a single binary while disabling all unnecessary Kubernetes components. One example is the pod scheduler, which the virtual cluster does not need because pods are actually scheduled in the underlying host cluster.

Hypervisor

In addition to k3s, there is a Kubernetes hypervisor that emulates a fully working Kubernetes setup in the virtual cluster. Apart from synchronizing virtual and host cluster resources, the hypervisor also redirects certain Kubernetes API requests, such as port forwarding or pod/service proxying, to the host cluster. In this respect, it acts as a reverse proxy for the virtual cluster.